Machine vision systems are immensely diverse. This specification is based on a conceptual model of what constitutes a machine vision system’s functionality. Making good use of the specification requires an understanding of this conceptual model. It is touched on only briefly in this section; more details can be found in Annex B.

A machine vision system is any computer system, smart camera, vision sensor or any other component that has the capability to record and process digital images or video streams for the shop floor or other industrial markets, typically with the aim of extracting information from this data.

Digital images or video streams represent data in a general sense, comprising multiple spatial dimensions (e.g. 1D scanner lines, 2D camera images, 3D point clouds, image sequences, etc.) acquired by any kind of imaging technique (e.g. visible light, infrared, ultraviolet, x-ray, radar, ultrasonic, virtual imaging etc.).

With respect to a specific machine vision task, the output of a machine vision system can be raw or pre-processed images or any image-based measurements, inspection results, process control data, robot guidance data, etc.

Machine vision therefore covers a very broad range of systems as well as of applications.

System types range from small sensors and smart cameras to multi-computer setups with diverse sensor equipment.

Applications include identification (like DataMatrix code, bar code or character recognition), pose determination (e.g. for robot guidance), assembly checks, gauging up to very high accuracy, surface inspection, color identification, etc.

In industrial production, a machine vision system is typically acting under the control and supervision of a machine control system, usually a PLC. There are many variations to this setup, depending on the type of product to be processed, e.g. individual parts or reel material, the organization of production etc.

A common situation in the production of individual work pieces is that a PLC informs the machine vision system about the arrival of a new part by sending a start signal and then waits until the machine vision system has answered with a result of some kind, e.g. quality information (passed/failed), a measurement value (size), or position information (x- and y-coordinates, rotation, possibly z-coordinate, or full pose in the case of a 3D system). The PLC then continues processing the work piece based on the information given by the vision system. Traditionally, the interfaces used for communication between a PLC and a machine vision system are digital I/O, the various types of field buses and industrial Ethernet systems on the market, and also plain Ethernet for the transmission of bulk data.
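The start/result handshake described above can be sketched in Python as follows. The sketch is informative only: `VisionSystem`, `VisionResult`, `plc_cycle` and all field names are hypothetical and are not defined by this specification, and the blocking call merely stands in for whatever interface (digital I/O, field bus, Ethernet) carries the signals in a real installation.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class VisionResult:
    """Hypothetical result record; field names are illustrative only."""
    passed: bool
    x_mm: float
    y_mm: float
    rotation_deg: float


class VisionSystem:
    """Stand-in for a machine vision system that answers a start signal."""

    def __init__(self, inspect: Callable[[int], VisionResult]) -> None:
        self._inspect = inspect

    def start(self, part_id: int) -> VisionResult:
        # A real system would acquire and process an image here.
        return self._inspect(part_id)


def plc_cycle(vision: VisionSystem, part_id: int) -> str:
    """One PLC cycle: send start, wait for the result, then act on it."""
    result = vision.start(part_id)  # blocks until the vision system answers
    if not result.passed:
        return f"part {part_id}: reject"
    return (
        f"part {part_id}: pick at ({result.x_mm:.1f}, {result.y_mm:.1f}), "
        f"rot {result.rotation_deg:.1f}"
    )
```

In this sketch the PLC side never inspects images itself; it only reacts to the result record, which mirrors the division of responsibilities described in the text.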

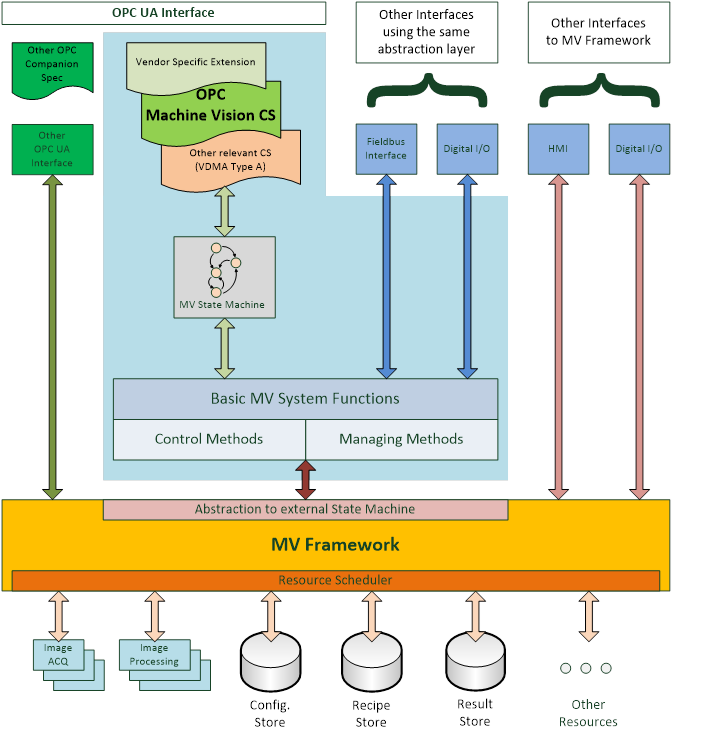

Figure 1 gives a generalized view on a machine vision system in the context of this companion specification. It assumes that there is some machine vision framework responsible for the acquisition and processing of the images. This framework is completely implementation specific to the system and is outside the scope of this companion specification.

This underlying system is currently presented to the “outside world”, e.g. the PLC, by various interfaces like digital I/O or field bus, typically using vendor specific protocol definitions. The interface described in this specification may co-exist with these interfaces and offer an additional view on the system or it may be used as the only interface to the system, depending on the requirements of the particular application.

The system may also be exposed through OPC UA interfaces according to other companion specifications. For example, DataMatrix code readers are by their nature machine vision systems but can also be exposed as systems adhering to the Auto ID specification. System vendors can of course also add their own OPC UA interfaces.

This companion specification provides a particular abstraction of a system envisioned to be running in an automated production environment where “automated” is meant in a very broad sense. A test bank for analyzing individual parts can be viewed as automated in that the press of a button by the operator starts the task of the machine vision system.

This abstraction may reflect the inner workings of the machine vision framework or it may be a layer on top of the framework presenting a view of it which is only loosely related to its interior construction.

The basic assumption of the model is that a machine vision system in a production environment goes through a sequence of states which are of interest to and may be influenced by the outside world.

Therefore, a core element of this companion specification is a state machine view of the machine vision system.

Also, a machine vision system may require information from the outside world, in addition to the information it gathers itself by image acquisition, e.g. information about the type of product to be processed. And it will typically pass information to the outside world, e.g. results from the processing.

Therefore, in addition to the state machine, a set of methods and data types is required to allow for this flow of information. Due to the diverse nature of machine vision systems and their applications, these data types will have to allow for vendor- and application-specific extensions.
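The combination of a state machine with methods that carry information in and out can be illustrated by the following minimal Python sketch. The state names, method names and transition table are purely illustrative assumptions; they are not the states or methods defined by this companion specification.

```python
from enum import Enum, auto


class State(Enum):
    # Illustrative states only; not the states defined by this specification.
    IDLE = auto()
    READY = auto()
    EXECUTING = auto()
    ERROR = auto()


# Allowed transitions, keyed by (current state, method name).
TRANSITIONS = {
    (State.IDLE, "select_product"): State.READY,
    (State.READY, "start"): State.EXECUTING,
    (State.EXECUTING, "finish"): State.READY,
    (State.EXECUTING, "fail"): State.ERROR,
    (State.ERROR, "reset"): State.IDLE,
}


class VisionStateMachine:
    """Toy state machine whose methods also move data in and out."""

    def __init__(self) -> None:
        self.state = State.IDLE
        self.product = None
        self.results = []

    def call(self, method: str, **info) -> State:
        target = TRANSITIONS.get((self.state, method))
        if target is None:
            # The method is not permitted in the current state.
            raise RuntimeError(f"{method} not allowed in state {self.state.name}")
        if method == "select_product":
            self.product = info["product"]       # information from the outside world
        elif method == "finish":
            self.results.append(info["result"])  # information for the outside world
        self.state = target
        return self.state
```

A client that observes `state` and calls only the methods permitted in that state does not need to know anything about the underlying machine vision framework.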

The intention of the state machine, the methods, as well as the data types, is to provide a framework allowing for standardized integration of machine vision systems into automated production systems, and guidance for filling in the application-specific areas.

Of course, vendors will always be able to extend this specification and provide additional services according to the specific capabilities of their systems and the particular applications.

Figure 1 – System model for OPC Machine Vision

OPC UA is an open and royalty free set of standards designed as a universal communication protocol. While there are numerous communication solutions available, OPC UA has key advantages:

- A state-of-the-art security model (see OPC 10000-2).

- A fault tolerant communication protocol.

- An information modelling framework that allows application developers to represent their data in a way that makes sense to them.

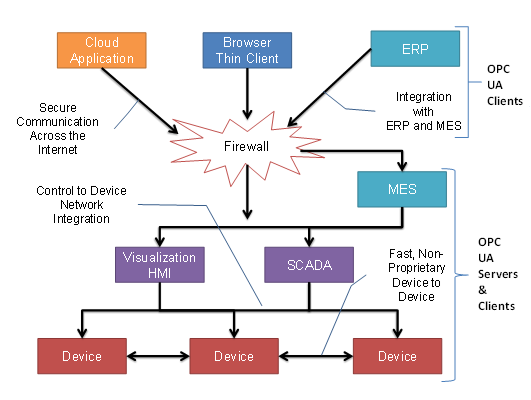

OPC UA has a broad scope which delivers economies of scale for application developers. This means that a larger number of high-quality applications are available at a reasonable cost. When combined with semantic models such as OPC Machine Vision, OPC UA makes it easier for end users to access data via generic commercial applications.

The OPC UA model is scalable from small devices to ERP systems. OPC UA Servers process information locally and then provide that data in a consistent format to any application requesting data, for example ERP, MES, PMS, Maintenance Systems, HMI, Smartphone or a standard Browser. For a more complete overview see OPC 10000-1.

As an open standard, OPC UA is based on standard internet technologies, like TCP/IP, HTTP and WebSockets.

As an extensible standard, OPC UA provides a set of Services (see OPC 10000-4) and a basic information model framework. This framework provides an easy manner for creating and exposing vendor defined information in a standard way. More importantly all OPC UA Clients are expected to be able to discover and use vendor-defined information. This means OPC UA users can benefit from the economies of scale that come with generic visualization and historian applications. This specification is an example of an OPC UA Information Model designed to meet the needs of developers and users.

OPC UA Clients can be any consumer of data, from another device on the network to browser-based thin clients and ERP systems. The full scope of OPC UA applications is shown in Figure 2.

Figure 2 – The Scope of OPC UA within an Enterprise

OPC UA provides a robust and reliable communication infrastructure with mechanisms for handling lost messages, failover, heartbeat, etc. With its binary encoded data, it offers a high-performing data exchange solution. Security is built into OPC UA because security requirements are becoming more and more important, especially as production environments are connected to the office network or the internet and attackers are starting to focus on automation systems.

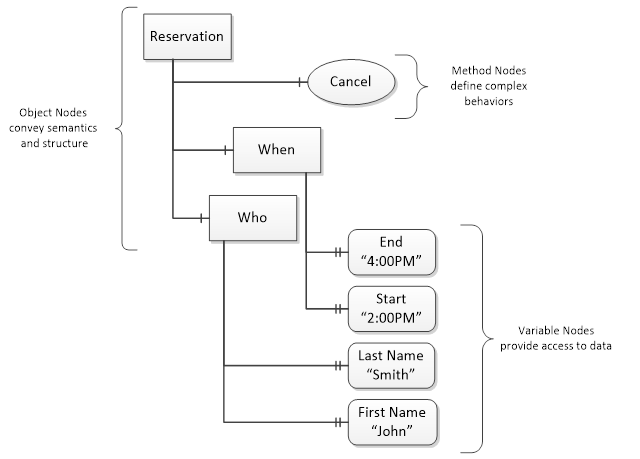

OPC UA provides a framework that can be used to represent complex information as Objects in an AddressSpace which can be accessed with standard services. These Objects consist of Nodes connected by References. Different classes of Nodes convey different semantics. For example, a Variable Node represents a value that can be read or written. The Variable Node has an associated DataType that can define the actual value, such as a string, float, structure etc. It can also describe the Variable value as a variant. A Method Node represents a function that can be called. Every Node has a number of Attributes, including a unique identifier called a NodeId and a non-localized name called a BrowseName. An Object representing a ‘Reservation’ is shown in Figure 3.

Figure 3 – A Basic Object in an OPC UA Address Space
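As an informative illustration of the Node concepts described above, the following Python sketch models Objects, Variables and Methods as Nodes connected by References. It is a simplified in-memory model, not an OPC UA implementation: NodeIds are reduced to strings, only two Attributes are modelled, and the children `GuestName` and `Cancel` of the ‘Reservation’ Object are assumed for the example.

```python
from dataclasses import dataclass, field
from typing import Any, Callable, List, Optional


@dataclass
class Node:
    node_id: str      # unique identifier (NodeId), simplified to a string
    browse_name: str  # non-localized name (BrowseName)
    references: List["Reference"] = field(default_factory=list)


@dataclass
class Reference:
    reference_type: str  # semantics of the relationship, e.g. "HasComponent"
    target: Node


@dataclass
class VariableNode(Node):
    data_type: str = "String"
    value: Any = None  # value that can be read or written


@dataclass
class MethodNode(Node):
    func: Optional[Callable[..., Any]] = None

    def call(self, *args: Any) -> Any:
        # A Method Node represents a function that can be called.
        return self.func(*args)


@dataclass
class ObjectNode(Node):
    pass


def build_reservation() -> ObjectNode:
    """Build a 'Reservation' Object with assumed, illustrative children."""
    guest = VariableNode("ns=1;i=2", "GuestName", data_type="String", value="Ada")
    cancel = MethodNode("ns=1;i=3", "Cancel", func=lambda: "cancelled")
    obj = ObjectNode("ns=1;i=1", "Reservation")
    obj.references.append(Reference("HasComponent", guest))
    obj.references.append(Reference("HasComponent", cancel))
    return obj
```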

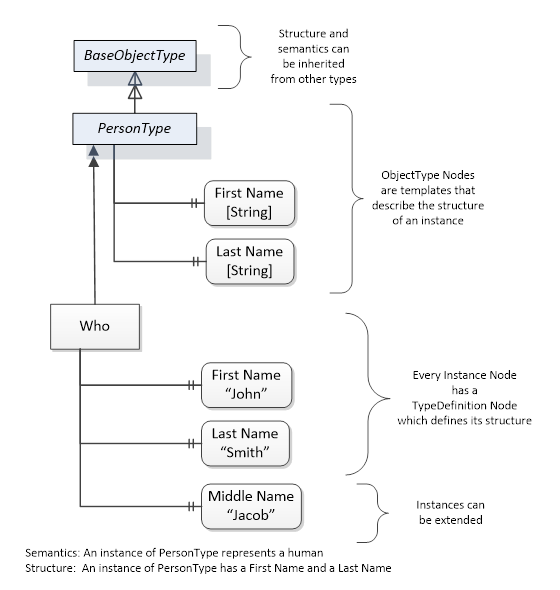

Object and Variable Nodes represent instances and they always reference a TypeDefinition (ObjectType or VariableType) Node which describes their semantics and structure. Figure 4 illustrates the relationship between an instance and its TypeDefinition.

The type Nodes are templates that define all of the children that can be present in an instance of the type. In the example in Figure 4 the PersonType ObjectType defines two children: First Name and Last Name. All instances of PersonType are expected to have the same children with the same BrowseNames. Within a type the BrowseNames uniquely identify the children. This means Client applications can be designed to search for children based on the BrowseNames from the type instead of NodeIds. This eliminates the need for manual reconfiguration of systems if a Client uses types that multiple Servers implement.
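The benefit of locating children by BrowseName rather than by NodeId can be sketched as follows. The two server dictionaries, their NodeId strings and the BrowseName spellings `FirstName` and `LastName` are assumptions made for this example only.

```python
from typing import Dict, Optional

# Two hypothetical Servers expose an instance of the same PersonType.
# The child NodeIds differ, but the BrowseNames come from the shared type.
SERVER_A: Dict[str, str] = {
    "FirstName": "ns=2;i=1001",
    "LastName": "ns=2;i=1002",
}
SERVER_B: Dict[str, str] = {
    "FirstName": "ns=5;s=Person1.First",
    "LastName": "ns=5;s=Person1.Last",
}


def find_child(children: Dict[str, str], browse_name: str) -> Optional[str]:
    """Return the NodeId of the child with the given BrowseName, if present."""
    return children.get(browse_name)


def read_plan(children: Dict[str, str]) -> Dict[str, Optional[str]]:
    """Resolve the NodeIds a Client would read, using only type knowledge."""
    return {name: find_child(children, name) for name in ("FirstName", "LastName")}
```

The same `read_plan` function works against both servers without reconfiguration, because it depends only on the BrowseNames defined by the type.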

OPC UA also supports the concept of sub-typing. This allows a modeller to take an existing type and extend it. There are rules regarding sub-typing defined in OPC 10000-3, but in general they allow the extension of a given type or the restriction of a DataType. For example, the modeller may decide that the existing ObjectType in some cases needs an additional Variable. The modeller can create a subtype of the ObjectType and add the Variable. A Client that is expecting the parent type can treat the new type as if it were of the parent type. Regarding DataTypes, subtypes can only restrict. If a Variable is defined to have a numeric value, a subtype could restrict it to a float.

Figure 4 – The Relationship between Type Definitions and Instances
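The extension of an ObjectType by sub-typing can be illustrated with the following Python sketch. It is a simplified model rather than the rules of OPC 10000-3: children are represented only by their BrowseNames, and the subtype `EmployeeType` with its child `EmployeeId` is hypothetical.

```python
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class ObjectType:
    name: str
    parent: Optional["ObjectType"] = None
    own_children: List[str] = field(default_factory=list)

    def children(self) -> List[str]:
        """All children: those inherited from the parent plus the type's own."""
        inherited = self.parent.children() if self.parent else []
        return inherited + self.own_children

    def is_subtype_of(self, other: "ObjectType") -> bool:
        """True if this type is `other` or is derived from it."""
        current: Optional[ObjectType] = self
        while current is not None:
            if current is other:
                return True
            current = current.parent
        return False


# PersonType as in Figure 4; EmployeeType is an assumed subtype for illustration.
person_type = ObjectType("PersonType", own_children=["FirstName", "LastName"])
employee_type = ObjectType(
    "EmployeeType", parent=person_type, own_children=["EmployeeId"]
)
```

A Client written against `person_type` finds every child it expects on an instance of `employee_type`, which is why it can treat the subtype as if it were the parent type.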

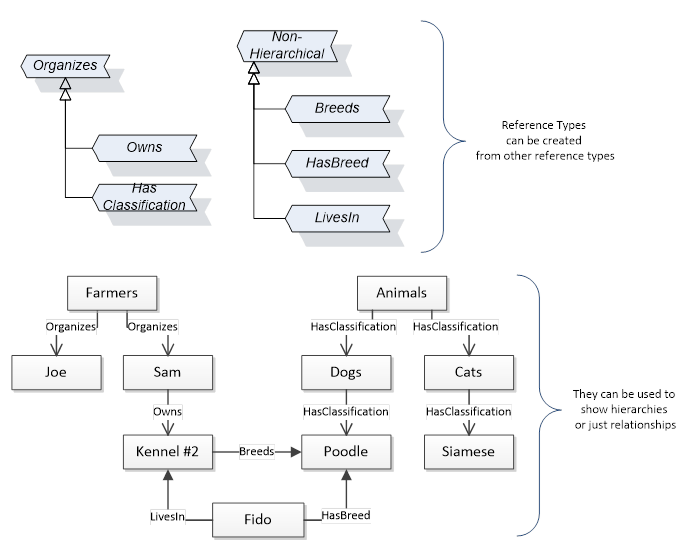

References allow Nodes to be connected in ways that describe their relationships. All References have a ReferenceType that specifies the semantics of the relationship. References can be hierarchical or non-hierarchical. Hierarchical References are used to create the structure of Objects and Variables. Non-hierarchical References are used to create arbitrary associations. Applications can define their own ReferenceType by creating subtypes of an existing ReferenceType. Subtypes inherit the semantics of the parent but may add additional restrictions. Figure 5 depicts several References, connecting different Objects.

Figure 5 – Examples of References between Objects
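How a subtype of a ReferenceType inherits the hierarchical or non-hierarchical nature of its parent can be sketched as below. The table lists a small excerpt of the standard ReferenceType hierarchy; `IsInspectedBy` is a hypothetical application-defined ReferenceType added for illustration.

```python
# Maps each ReferenceType to its parent type (excerpt only).
REFERENCE_TYPE_PARENT = {
    "HierarchicalReferences": "References",
    "NonHierarchicalReferences": "References",
    "HasChild": "HierarchicalReferences",
    "Aggregates": "HasChild",
    "HasComponent": "Aggregates",
    "HasTypeDefinition": "NonHierarchicalReferences",
    "IsInspectedBy": "NonHierarchicalReferences",  # hypothetical subtype
}


def is_hierarchical(reference_type: str) -> bool:
    """Walk up the supertype chain looking for HierarchicalReferences."""
    current = reference_type
    while current in REFERENCE_TYPE_PARENT:
        if current == "HierarchicalReferences":
            return True
        current = REFERENCE_TYPE_PARENT[current]
    return False
```

A generic browser could use such a check to decide which References to follow when building a tree view and which to display as plain associations.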

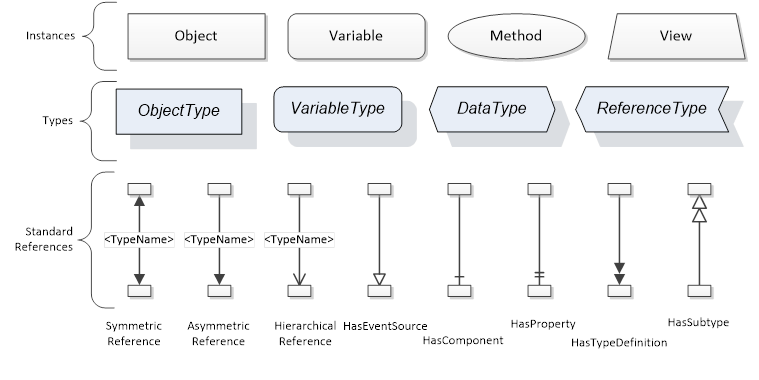

The figures above use a notation that was developed for the OPC UA specification. The notation is summarized in Figure 6. UML representations can also be used; however, the OPC UA notation is less ambiguous because there is a direct mapping from the elements in the figures to Nodes in the AddressSpace of an OPC UA Server.

Figure 6 – The OPC UA Information Model Notation

A complete description of the different types of Nodes and References can be found in OPC 10000-3 and the base structure is described in OPC 10000-5.

The OPC UA specification defines a very wide range of functionality in its basic information model. It is not expected that all Clients or Servers support all functionality in the OPC UA specifications. OPC UA includes the concept of Profiles, which segment the functionality into testable, certifiable units. This allows the definition of functional subsets (that are expected to be implemented) within a companion specification. The Profiles do not restrict functionality, but generate requirements for a minimum set of functionality (see OPC 10000-7).

OPC UA allows information from many different sources to be combined into a single coherent AddressSpace. Namespaces are used to make this possible by eliminating naming and id conflicts between information from different sources. Namespaces in OPC UA have a globally unique string called a NamespaceUri and a locally unique integer called a NamespaceIndex. The NamespaceIndex is only unique within the context of a Session between an OPC UA Client and an OPC UA Server. The Services defined for OPC UA use the NamespaceIndex to specify the Namespace for qualified values.
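The relationship between the globally unique NamespaceUri and the locally unique NamespaceIndex can be sketched as follows. The namespace arrays and the URI `http://example.com/UA/Vision/` are invented for this example; only the entry at index 0, the OPC UA base namespace, reflects the base specification. The `ns=<index>;i=<number>` notation is the usual string form of a numeric NodeId.

```python
from typing import List

OPC_UA_BASE = "http://opcfoundation.org/UA/"
VISION_URI = "http://example.com/UA/Vision/"  # hypothetical NamespaceUri

# Namespace arrays as two different Servers might report them.
SERVER_A_NAMESPACES: List[str] = [OPC_UA_BASE, "urn:server-a", VISION_URI]
SERVER_B_NAMESPACES: List[str] = [OPC_UA_BASE, VISION_URI, "urn:server-b"]


def namespace_index(namespace_array: List[str], uri: str) -> int:
    """Look up the NamespaceIndex that a given Server assigns to a URI."""
    return namespace_array.index(uri)  # raises ValueError if the URI is unknown


def numeric_node_id(namespace_array: List[str], uri: str, identifier: int) -> str:
    """Build the string form of a numeric NodeId valid for that Server."""
    return f"ns={namespace_index(namespace_array, uri)};i={identifier}"
```

Because the index may differ between Servers, a Client is well advised to store the NamespaceUri and resolve the NamespaceIndex again after connecting, rather than hard-coding the index.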

There are two types of values in OPC UA that are qualified with Namespaces: NodeIds and QualifiedNames. NodeIds are globally unique identifiers for Nodes. This means the same Node with the same NodeId can appear in many Servers. This, in turn, means Clients can have built in knowledge of some Nodes. OPC UA Information Models generally define globally unique NodeIds for the TypeDefinitions defined by the Information Model.

QualifiedNames are non-localized names qualified with a Namespace. They are used for the BrowseNames of Nodes and allow the same names to be used by different information models without conflict. TypeDefinitions are not allowed to have children with duplicate BrowseNames; however, instances do not have that restriction.

An OPC UA companion specification for an industry specific vertical market describes an Information Model by defining ObjectTypes, VariableTypes, DataTypes and ReferenceTypes that represent the concepts used in the vertical market, and potentially also well-defined Objects as entry points into the AddressSpace.